data breaches

Hardware-Level Cybersecurity: How to Stop Root-of-Trust Exploits

Secret Blizzard’s embassy campaign shows why device trust beats TLS trust alone—and how to harden firmware, keys, and boot chains

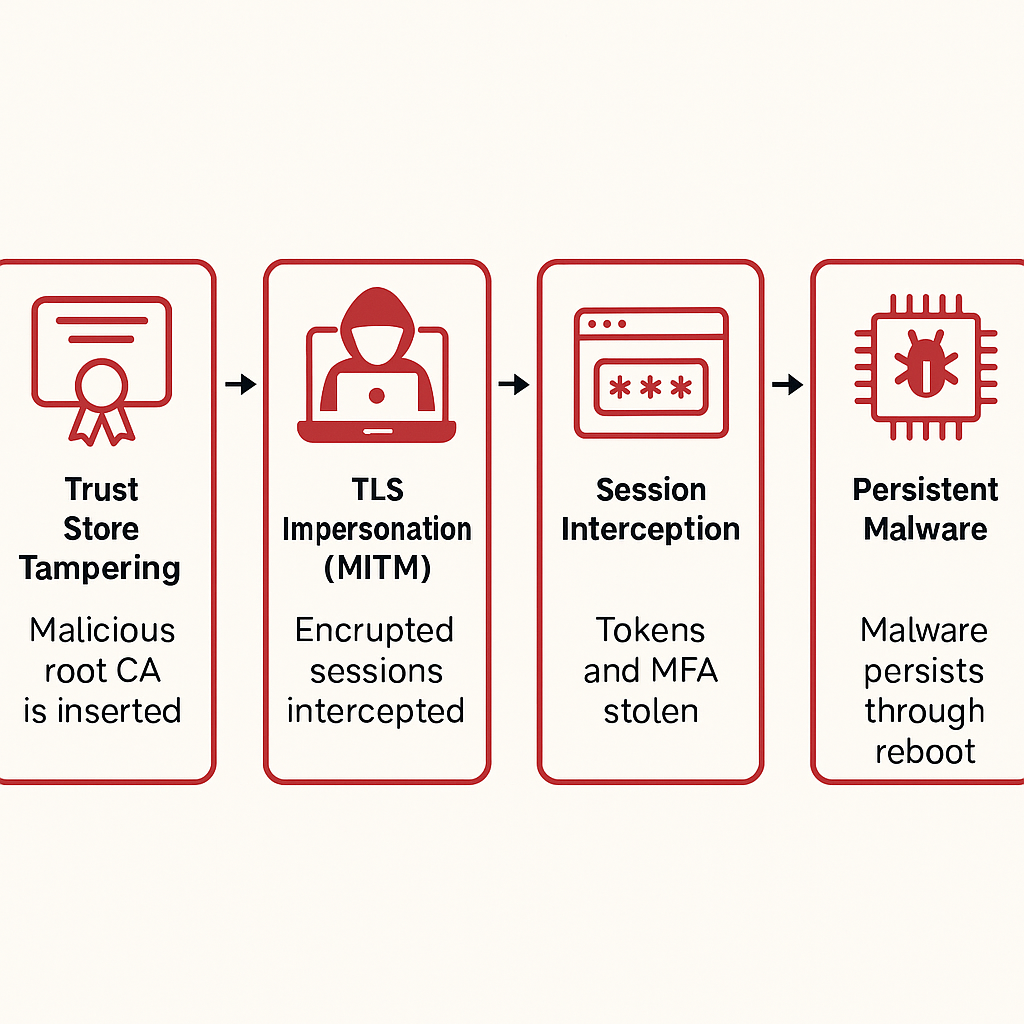

A new expert analysis warns that root-of-trust (RoT) compromises can neutralize MFA and FIDO protections by subverting certificate trust and boot integrity. The campaign—linked to Secret Blizzard—demonstrates that once a system’s trust anchor is controlled, attackers can man-in-the-middle “secure” sessions and persist below the OS. This guide details practical defenses and standards-based controls.

A recent case study shows Russian-backed Secret Blizzard bypassing MFA at foreign embassies by tampering with the root of trust—the very mechanism devices use to decide what (and whom) to trust online. Instead of phishing credentials, attackers inserted a rogue root certificate and intercepted encrypted traffic without warnings, proving that TLS-anchored MFA fails when the device’s trust store is compromised.

What Happened

- Attack essence: Control the victim’s local trust anchor (root CA / trust store) → impersonate sites via MITM → harvest tokens, cookies, and MFA flows without browser alerts.

- Why it matters: FIDO/WebAuthn assume authentic TLS. If TLS validation is subverted, MFA loses its authenticity check.

- Who’s at risk: Any org that relies solely on TLS + MFA without device-bound credentials, firmware integrity, and independent attestation—especially governments, cloud operators, finance, and enterprises with high-risk network locales.

“A root-of-trust compromise undermines all TLS-based protections, including FIDO-based MFA.” — The Hacker News expert analysis summarizing the campaign.

“Platform firmware must be protected, corruption detected, and recovery ensured in the event of compromise.” — NIST SP 800-193 (Platform Firmware Resiliency).

“Treat firmware and trust stores as live attack surfaces. Bind credentials to hardware, enforce measured boot, and continuously attest device state—or assume your MFA can be silently routed.” — El Mostafa Ouchen, cybersecurity author and analyst.

Technical Deep Dive

1) Root-of-Trust Attack Flow

- Trust Store Tampering: Adversary adds a malicious root CA or manipulates the device PKI.

- TLS Impersonation (MITM): The attacker issues leaf certs for target domains. The browser accepts them because the rogue root is trusted.

- Session Interception: Harvest SAML/OIDC tokens, cookies, and challenge/response flows—even with WebAuthn/FIDO—because the browser “thinks” it’s talking to the real site.

2) Why Firmware & Boot Matter

EDR tools and browsers run above the OS, so they cannot see a trust anchor that was poisoned during early boot or through privileged management engines. LoJax, the first UEFI rootkit found in the wild, proved that firmware persistence is feasible and that pre-OS footholds can be long-lived.

3) Controls That Actually Help

- Device-bound, non-exportable keys (TPM/Secure Enclave/Pluton): Keys never leave hardware; sign-in requires the physical device.

- Measured & Verified Boot: Record each boot stage in hardware and verify with policies; quarantine on mismatch. Follow NIST SP 800-193 for protect-detect-recover.

- Independent Root of Trust for Credentials: Co-sign credentials with both the device and the identity cloud, so a tampered local trust store can’t forge identity.

- Mutual Cryptographic Verification: Device ↔️ IdP both attest to each other beyond TLS (e.g., hardware signals + cloud policy).

- Continuous Session Risk Checks: Re-evaluate device posture and revoke access mid-session on trust drift (rogue CA detected, boot log mismatch).
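The measured-boot control in the list above can be illustrated with a short sketch. It assumes a SHA-256 PCR bank and a simplified event log in which each entry is the raw bytes of a boot component; real TPM event logs carry precomputed digests and richer metadata. The names `extend`, `replay`, and `verify_boot` are hypothetical helpers, not part of any TPM library.

```python
import hashlib
import hmac
from typing import Sequence

PCR_SIZE = 32  # bytes in a SHA-256 PCR bank (assumption for this sketch)


def extend(pcr: bytes, component: bytes) -> bytes:
    """TPM-style extend: new PCR = SHA256(old PCR || SHA256(component))."""
    digest = hashlib.sha256(component).digest()
    return hashlib.sha256(pcr + digest).digest()


def replay(event_log: Sequence[bytes]) -> bytes:
    """Replay a boot event log from an all-zero PCR and return the final value."""
    pcr = bytes(PCR_SIZE)
    for component in event_log:
        pcr = extend(pcr, component)
    return pcr


def verify_boot(event_log: Sequence[bytes], expected_pcr: bytes) -> bool:
    """Return True only if the replayed log matches the known-good PCR value."""
    return hmac.compare_digest(replay(event_log), expected_pcr)


# Illustrative, made-up boot components.
known_good = [b"firmware-v1", b"bootloader-v1", b"kernel-v1"]
golden_pcr = replay(known_good)
tampered = [b"firmware-v1", b"bootkit", b"kernel-v1"]
```

Because each extend hashes the previous value, changing or reordering any stage changes the final PCR, which is why an attestation service can quarantine on a single mismatch.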

MITRE ATT&CK Mapping (selected)

- Initial Access: Valid Accounts via session hijack (T1078), Trusted Relationship (T1199).

- Defense Evasion: Subvert Trust Controls: Install Root Certificate (T1553.004), Modify Authentication Process (T1556).

- Credential Access: Steal Web Session Cookie (T1539), obtained via Adversary-in-the-Middle (T1557).

- Persistence: Pre-OS Boot: System Firmware (T1542.001) and Bootkit (T1542.003).

- Command & Control: Web protocols over TLS (T1071.001).

(IDs aligned to Enterprise matrix; exact sub-techniques vary by environment.)

Impact & Response

Impact: Stolen sessions, bypassed MFA, and durable persistence if boot firmware is altered. Government and regulated sectors face heightened compliance and reporting exposure given device trust failures.

Immediate actions (step-by-step):

- Inventory & lock trust stores: Alert on new root CAs; require admin-approval workflows + logging for CA changes.

- Turn on measured/verified boot across fleets; export boot measurements to an attestation service.

- Bind credentials to hardware: Enforce TPM/Secure Enclave/Pluton-backed keys; disable fallbacks to exportable secrets.

- Session protection: Short-lived tokens, continuous re-auth on posture drift, and token binding where available.

- Firmware discipline: Apply OEM updates; enable write protection on SPI flash; require signed UEFI capsules; monitor for UEFI variable anomalies.

- Isolation on suspicion: If rogue CAs or boot mismatches are detected, block access, capture measurements, and route the device to firmware re-flash / secure recovery.

Background & Context

- Real-world precedent: LoJax proved UEFI persistence in the wild (Sednit/Fancy Bear), making below-OS implants a practical threat.

- Raising the baseline: Vendors are pushing hardware roots like Microsoft Pluton to bring TPM-class security inside the CPU and enable simpler, updateable attestation at scale.

What’s Next

Expect wider adoption of hardware-anchored identity, token binding, and continuous device attestation—and likely policy mandates in government and critical infrastructure to implement NIST’s protect-detect-recover firmware model. For defenders, the lesson is clear: move trust from the network perimeter into silicon.

Root-of-Trust (RoT) Defense Checklist for CISOs & IT Teams

1. Firmware & Boot Integrity

- ✅ Enable Secure Boot + Verified Boot on all endpoints.

- ✅ Turn on measured boot and forward logs to an attestation service (e.g., Microsoft Defender for Endpoint, formerly Defender ATP, or a custom MDM).

- ✅ Apply NIST SP 800-193 Protect–Detect–Recover guidance: enable rollback protection, watchdogs, and signed firmware updates.

2. Credential Binding

- ✅ Require TPM/Secure Enclave/Pluton for storing keys (disable exportable software keys).

- ✅ Enforce device-bound FIDO2 credentials in identity providers (Microsoft Entra ID, formerly Azure AD; Okta; Google Workspace).

- ✅ Turn off legacy MFA fallback (e.g., SMS or OTP that bypass hardware).

3. Trust Store & Certificates

- ✅ Monitor for rogue root certificates in Windows/Mac/Linux trust stores.

- ✅ Enforce admin-only CA installs with logging and SIEM integration.

- ✅ Run weekly CA inventory scans; alert on any unauthorized roots.
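A CA inventory scan like the one in this checklist can be reduced to a fingerprint comparison. The sketch below is a minimal illustration, assuming the approved baseline is kept as a set of SHA-256 fingerprints of DER-encoded certificates; `fingerprint` and `find_unapproved_roots` are hypothetical helper names.

```python
import hashlib
from typing import Iterable, List


def fingerprint(der_cert: bytes) -> str:
    """SHA-256 fingerprint of a DER-encoded certificate, as lowercase hex."""
    return hashlib.sha256(der_cert).hexdigest()


def find_unapproved_roots(
    installed_der: Iterable[bytes], approved_fingerprints: Iterable[str]
) -> List[str]:
    """Return fingerprints present in the trust store but absent from the baseline."""
    installed = {fingerprint(cert) for cert in installed_der}
    return sorted(installed - set(approved_fingerprints))


# Placeholder byte strings stand in for real DER certificates.
approved_certs = [b"approved-root-a", b"approved-root-b"]
baseline = {fingerprint(cert) for cert in approved_certs}
unexpected = find_unapproved_roots(approved_certs + [b"rogue-root"], baseline)
```

On a real host, one possible source of the installed certificates is Python's `ssl.create_default_context().get_ca_certs(binary_form=True)`, although it only reports certificates the context has actually loaded, so a platform-specific export of the trust store is likely to be more complete. Any non-empty result would feed the alerting and SIEM workflow described above.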

4. Session Protection

- ✅ Enable token binding where supported (ties session to device).

- ✅ Enforce short-lived tokens (e.g., 10–15 min for critical apps).

- ✅ Turn on continuous risk evaluation—revoke sessions on CA mismatch or boot measurement drift.
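The session-protection items above amount to a policy check that runs repeatedly during a session rather than once at login. The following sketch assumes a 15-minute token lifetime and two posture signals supplied by other tooling; the `DevicePosture` fields and the reason strings are illustrative, not taken from any identity provider's API.

```python
from dataclasses import dataclass
from typing import List

MAX_TOKEN_AGE_SECONDS = 15 * 60  # upper end of the 10-15 minute range above


@dataclass(frozen=True)
class DevicePosture:
    unapproved_root_ca: bool   # trust-store scan found a root outside the baseline
    boot_measurement_ok: bool  # attestation service accepted the boot measurements


def revocation_reasons(
    token_issued_at: float, now: float, posture: DevicePosture
) -> List[str]:
    """Return every reason the session should be revoked; empty means keep it."""
    reasons = []
    if now - token_issued_at > MAX_TOKEN_AGE_SECONDS:
        reasons.append("token_expired")
    if posture.unapproved_root_ca:
        reasons.append("rogue_ca_detected")
    if not posture.boot_measurement_ok:
        reasons.append("boot_measurement_drift")
    return reasons


healthy = DevicePosture(unapproved_root_ca=False, boot_measurement_ok=True)
drifted = DevicePosture(unapproved_root_ca=True, boot_measurement_ok=False)
```

Returning a list of reasons rather than a boolean keeps the decision auditable, which helps when revocations have to be explained to users or logged for incident response.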

5. Supply-Chain & Device Controls

- ✅ Use OEM-signed firmware only; enable capsule update verification.

- ✅ Lock SPI flash where hardware supports it.

- ✅ Segment management engines (iLO, iDRAC, BMC) into separate VLANs with strict ACLs.

6. Incident Response Playbook

- ✅ Isolate any device with trust-store anomalies or boot log mismatch.

- ✅ Re-flash firmware with vendor images, not OS reinstalls (malware may survive).

- ✅ Rotate all keys and certificates tied to that device.

- ✅ Conduct a forensic review of boot/firmware logs for persistence artifacts.

📌 Pro Tip from El Mostafa Ouchen:

“Defenders must treat trust anchors—firmware, secure boot, TPMs—not as passive baselines but as active attack surfaces. Building continuous attestation pipelines is the only way to catch RoT drift before adversaries turn MFA into a bypassed formality.”

Sources:

- The Hacker News — Expert Insights (Aug 18, 2025): Secret Blizzard’s RoT attack path and countermeasures (device-bound credentials, independent roots, mutual verification, continuous checks).

- NIST SP 800-193 (2018): Platform Firmware Resiliency—protect, detect, recover model; measured/verified boot guidance.

- ESET (LoJax, 2018): First in-the-wild UEFI rootkit demonstrating pre-OS persistence risk.

- Microsoft Pluton (2025 docs): Silicon-level root of trust, TPM 2.0 functionality, and updateable hardware security.


Cloudflare Outage Disrupts Global Internet — Company Restores Services After Major Traffic Spike

November 18, 2025 — MAG212NEWS

A significant outage at Cloudflare, one of the world’s leading internet infrastructure providers, caused widespread disruptions across major websites and online services on Tuesday. The incident, which began mid-morning GMT, temporarily affected access to platforms including ChatGPT, X (formerly Twitter), and numerous business, government, and educational services that rely on Cloudflare’s network.

According to Cloudflare, the outage was triggered by a sudden spike in “unusual traffic” flowing into one of its core services. The surge caused internal components to return 500-series error messages, leaving users unable to access services across regions in Europe, the Middle East, Asia, and North America.

Impact Across the Web

Because Cloudflare provides DNS, CDN, DDoS mitigation, and security services for millions of domains — powering an estimated 20% of global web traffic — the outage had swift and wide-reaching effects.

Users reported:

- Website loading failures

- “Internal Server Error” and “Bad Gateway” messages

- Slowdowns on major social platforms

- Inaccessibility of online tools, APIs, and third-party authentication services

The outage also briefly disrupted Cloudflare’s own customer-support portal, highlighting the interconnected nature of the company’s service ecosystem.

Cloudflare’s Response and Restoration

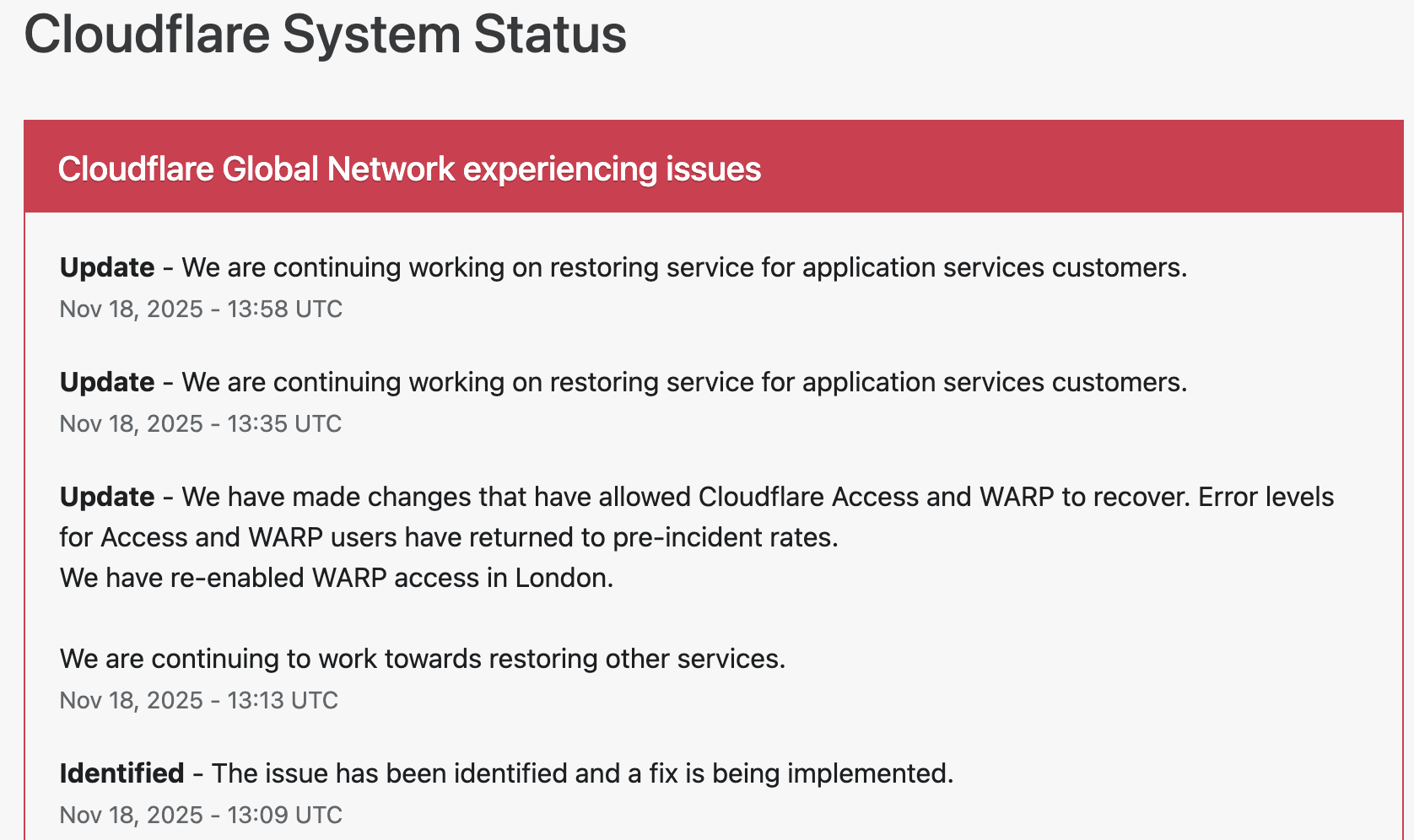

Cloudflare responded within minutes, publishing updates on its official status page and confirming that engineering teams were investigating the anomaly.

The company took the following steps to restore operations:

1. Rapid Detection and Acknowledgement

Cloudflare engineers identified elevated error rates tied to an internal service degradation. Public communications were issued to confirm the outage and reassure customers.

2. Isolating the Affected Systems

To contain the disruption, Cloudflare temporarily disabled or modified specific services in impacted regions. Notably, the company deactivated its WARP secure-connection service for users in London to stabilize network behavior while the fix was deployed.

3. Implementing Targeted Fixes

Technical teams rolled out configuration changes to Cloudflare Access and WARP, which successfully reduced error rates and restored normal traffic flow. Services were gradually re-enabled once systems were verified stable.

4. Ongoing Root-Cause Investigation

While the unusual-traffic spike remains the confirmed trigger, Cloudflare stated that a full internal analysis is underway to determine the exact source and prevent a recurrence.

By early afternoon UTC, Cloudflare confirmed that systems had returned to pre-incident performance levels, and affected services worldwide began functioning normally.

Why This Matters

Tuesday’s outage underscores a critical truth about modern internet architecture: a handful of infrastructure companies underpin a massive portion of global online activity. When one of them experiences instability — even briefly — the ripple effects are immediate and worldwide.

For businesses, schools, governments, and content creators, the incident is a reminder of the importance of:

- Redundant DNS/CDN providers

- Disaster-recovery and failover plans

- Clear communication protocols during service outages

- Vendor-dependency risk assessments
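As a rough illustration of the redundancy and failover items above, the sketch below tries a list of provider endpoints in order and moves on when one returns a 5xx status or cannot be reached. The function name, the injected `fetch` callable, and the example URLs are all assumptions made for the example; production failover usually happens at the DNS or load-balancer layer rather than in application code.

```python
from typing import Callable, Optional, Sequence, Tuple


def fetch_with_failover(
    providers: Sequence[str], fetch: Callable[[str], int]
) -> Tuple[str, int]:
    """Try each provider in order and return the first (url, status) below 500.

    `fetch` is any callable that returns an HTTP status code for a URL and
    raises OSError (for example ConnectionError) when the provider is unreachable.
    """
    last_error: Optional[Exception] = None
    for url in providers:
        try:
            status = fetch(url)
        except OSError as exc:
            last_error = exc
            continue
        if status < 500:
            return url, status
        last_error = RuntimeError(f"{url} returned {status}")
    raise RuntimeError("all providers failed") from last_error


# Simulated responses: the primary edge is failing, the secondary is healthy.
simulated = {"https://primary.example": 500, "https://secondary.example": 200}
chosen = fetch_with_failover(list(simulated), simulated.__getitem__)
```

Injecting `fetch` keeps the failover logic testable without network access and makes the ordering of providers an explicit, reviewable decision.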

Cloudflare emphasized that no evidence currently points to a cyberattack, though the nature of the traffic spike remains under investigation.

Looking Ahead

As Cloudflare completes its post-incident review, the company is expected to provide a detailed breakdown of the technical root cause and outline steps to harden its infrastructure. Given Cloudflare’s central role in global internet stability, analysts say the findings will be watched closely by governments, cybersecurity professionals, and enterprise clients.

For now, services are restored — but the outage serves as a powerful reminder of how interconnected and vulnerable the global web can be.


Cloudflare Outage Analysis: Systemic Failure in Edge Challenge Mechanism Halts Global Traffic

SAN FRANCISCO, CA — A widespread disruption across major internet services, including AI platform ChatGPT and social media giant X (formerly Twitter), has drawn critical attention to the stability of core internet infrastructure. The cause traces back to a major service degradation at Cloudflare, the dominant content delivery network (CDN) and DDoS mitigation provider. Users attempting to access affected sites were met with an opaque, yet telling, error message: “Please unblock challenges.cloudflare.com to proceed.”

This incident was not a simple server crash but a systemic failure within the crucial Web Application Firewall (WAF) and bot management pipeline, resulting in a cascade of HTTP 5xx errors that effectively severed client-server connections for legitimate users.

The Mechanism of Failure: challenges.cloudflare.com

The error message observed globally points directly to a malfunction in Cloudflare’s automated challenge system. The subdomain challenges.cloudflare.com is central to the company’s security and bot defense strategy, acting as an intermediate validation step for traffic suspected of being malicious (bots, scrapers, or DDoS attacks).

This validation typically involves:

- Browser Integrity Check (BIC): A non-invasive test ensuring the client browser is legitimate.

- Managed Challenge: A dynamic, non-interactive proof-of-work check.

- Interactive Challenge (CAPTCHA): A final, user-facing verification mechanism.

In a healthy system, a user passing through Cloudflare’s edge network is either immediately granted access or temporarily routed to this challenge page for verification.

During the outage, however, the Challenge Logic itself appears to have failed at the edge of Cloudflare’s network. When the system was invoked (likely due to high load or a misconfiguration), the expected security response—a functional challenge page—returned an internal server error (a 500-level status code). This meant:

- The Request Loop: Legitimate traffic was correctly flagged for a challenge, but the server hosting the challenge mechanism failed to process or render the page correctly.

- The HTTP 500 Cascade: Instead of displaying the challenge, the Cloudflare edge server returned a “500 Internal Server Error” to the client, sometimes obfuscated by the text prompt to “unblock” the challenges domain. This effectively created a dead end, blocking authenticated users from proceeding to the origin server (e.g., OpenAI’s backend for ChatGPT).

Technical Impact on Global Services

The fallout underscored the concentration risk inherent in modern web architecture. As a reverse proxy, Cloudflare sits between the end-user and the origin server for a vast percentage of the internet.

For services like ChatGPT, which rely heavily on fast, secure, and authenticated API calls and constant data exchange, the WAF failure introduced severe latency and outright connection refusal. A failure in Cloudflare’s global network meant that fundamental features such as DNS resolution, TLS termination, and request routing were compromised, leading to:

- API Timeouts: Applications utilizing Cloudflare’s API for configuration or deployment experienced critical failures.

- Widespread Service Degradation: The systemic 5xx errors at the L7 (Application Layer) caused services to appear “down,” even if the underlying compute resources and databases of the origin servers remained fully operational.
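The point that a service can look down while its origin is healthy suggests a simple triage check: probe the same service once through the edge and once directly at the origin, then compare. The sketch below assumes both probes yield an HTTP status code or `None` on timeout; `classify_outage` and its labels are hypothetical.

```python
from typing import Optional


def classify_outage(edge_status: Optional[int], origin_status: Optional[int]) -> str:
    """Classify where a failure sits, given edge and direct-to-origin probe results.

    A status of None means the probe timed out or the connection was refused.
    """

    def healthy(status: Optional[int]) -> bool:
        return status is not None and status < 500

    if healthy(edge_status):
        return "healthy"
    if healthy(origin_status):
        # Users see errors, yet the origin answers normally: the proxy layer is at fault.
        return "edge_failure"
    return "origin_or_both_failure"
```

For instance, `classify_outage(500, 200)` returns `"edge_failure"`, matching the pattern described in this incident. A direct origin probe is only possible when the origin accepts traffic from the monitoring host, which many deployments deliberately restrict.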

Cloudflare’s official status updates confirmed they were investigating an issue impacting “multiple customers: Widespread 500 errors, Cloudflare Dashboard and API also failing.” While the exact trigger was later traced to an internal platform issue (in some historical Cloudflare incidents, this has been a BGP routing error or a misconfigured firewall rule pushed globally), the user-facing symptom highlighted the fragility of relying on a single third party for security and content delivery on a global scale.

Mitigation and the Single Point of Failure

While Cloudflare teams worked to roll back configuration changes and isolate the fault domain, the incident renews discussion on the “single point of failure” doctrine. When a critical intermediary layer—responsible for security, routing, and caching—experiences a core logic failure, the entire digital economy resting on it is exposed.

Engineers and site reliability teams are now expected to further scrutinize multi-CDN and multi-cloud strategies, ensuring that critical application traffic paths are not entirely dependent on a single third-party’s edge infrastructure, a practice often challenging due to cost and operational complexity. The “unblock challenges” error serves as a stark reminder of the technical chasm between a user’s browser and the complex, interconnected security apparatus that underpins the modern web.


Manufacturing Software at Risk from CVE-2025-5086 Exploit

Dassault Systèmes patches severe vulnerability in Apriso manufacturing software that could let attackers bypass authentication and compromise factories worldwide.

A newly disclosed flaw, tracked as CVE-2025-5086, poses a major security risk to manufacturers using Dassault Systèmes’ DELMIA Apriso platform. The bug could allow unauthenticated attackers to seize control of production environments, prompting urgent patching from the vendor and warnings from cybersecurity experts.

A critical vulnerability in DELMIA Apriso, a manufacturing execution system used by global industries, could let hackers bypass authentication and gain full access to sensitive production data, according to a security advisory published this week.

Dassault Systèmes confirmed the flaw, designated CVE-2025-5086, affects multiple versions of Apriso and scored 9.8 on the CVSS scale, placing it in the “critical” category. Researchers said the issue stems from improper authentication handling that allows remote attackers to execute privileged actions without valid credentials.

The company has released security updates and urged immediate deployment, warning that unpatched systems could become prime targets for industrial espionage or sabotage. The flaw is particularly alarming because Apriso integrates with enterprise resource planning (ERP), supply chain, and industrial control systems, giving attackers a potential foothold in critical infrastructure.

- “This is the kind of vulnerability that keeps CISOs awake at night,” said Maria Lopez, industrial cybersecurity analyst at Kaspersky ICS CERT. “If exploited, it could shut down production lines or manipulate output, creating enormous financial and safety risks.”

- “Manufacturing software has historically lagged behind IT security practices, making these flaws highly attractive to threat actors,” noted James Patel, senior researcher at SANS Institute.

- El Mostafa Ouchen, cybersecurity author, told MAG212News: “This case shows why manufacturing execution systems must adopt zero-trust principles. Attackers know that compromising production software can ripple across supply chains and economies.”

- “We are actively working with customers and partners to ensure systems are secured,” Dassault Systèmes said in a statement. “Patches and mitigations have been released, and we strongly recommend immediate updates.”

Technical Analysis

The flaw resides in Apriso’s authentication module. Improper input validation in login requests allows attackers to bypass session verification, enabling arbitrary code execution with administrative privileges. Successful exploitation could:

- Access or modify production databases.

- Inject malicious instructions into factory automation workflows.

- Escalate attacks into connected ERP and PLM systems.

Mitigations include applying vendor patches, segmenting Apriso servers from external networks, enforcing MFA on supporting infrastructure, and monitoring for abnormal authentication attempts.
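Monitoring for abnormal authentication attempts can start with a sliding-window count of failures per source. The sketch below is a generic illustration, not an Apriso-specific detector: the event tuple format, the thresholds, and the function name `flag_sources` are assumptions, and a bypass that never produces a failed login would need other signals.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, Set, Tuple

AuthEvent = Tuple[float, str, bool]  # (timestamp in seconds, source IP, success flag)


def flag_sources(
    events: Iterable[AuthEvent], max_failures: int = 5, window_seconds: float = 60.0
) -> Set[str]:
    """Flag sources with more than `max_failures` failed logins inside the window.

    Events are assumed to be sorted by timestamp.
    """
    recent: Dict[str, Deque[float]] = defaultdict(deque)
    flagged: Set[str] = set()
    for timestamp, source, success in events:
        if success:
            continue
        failures = recent[source]
        failures.append(timestamp)
        # Drop failures that have aged out of the sliding window.
        while failures and timestamp - failures[0] > window_seconds:
            failures.popleft()
        if len(failures) > max_failures:
            flagged.add(source)
    return flagged


# Made-up events: one source fails six times in six seconds, another once every 100 s.
burst = [(float(t), "10.0.0.5", False) for t in range(6)]
slow = [(float(t * 100), "10.0.0.9", False) for t in range(6)]
suspicious = flag_sources(sorted(burst + slow))
```

The flagged sources would then be correlated with patch status and network segmentation data before any blocking decision is made.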

Impact & Response

Organizations in automotive, aerospace, and logistics sectors are particularly exposed. Exploited at scale, the vulnerability could cause production delays, supply chain disruptions, and theft of intellectual property. Security teams are advised to scan their environments, apply updates, and coordinate incident response planning.

Background

This disclosure follows a string of high-severity flaws in industrial and operational technology (OT) software, including vulnerabilities in Siemens’ TIA Portal and Rockwell Automation controllers. Experts warn that adversaries—ranging from ransomware gangs to state-sponsored groups—are increasingly focusing on OT targets due to their high-value disruption potential.

Conclusion

The CVE-2025-5086 flaw underscores the urgency for manufacturers to prioritize cybersecurity in factory software. As digital transformation accelerates, securing industrial platforms like Apriso will be critical to ensuring business continuity and protecting global supply chains.