news tech

Nova H1 Audio Earrings: Unraveling the Kamala Harris Conspiracy

The internet is no stranger to conspiracy theories, and the latest in the mix involves Vice President Kamala Harris and a pair of tech-infused earrings. Following a debate between Harris and Donald Trump, claims surfaced on social media that the Vice President was wearing Nova H1 Audio Earrings, a pair of Bluetooth-enabled devices disguised as pearl earrings. But is there any truth to these allegations, or is this another unfounded internet rumor?

The Conspiracy Explained

Several social media accounts, including @24ELECTIONS on X (formerly Twitter), speculated that Kamala Harris wore Nova H1 Audio Earrings during the debate. These earrings, developed by German startup NOVA Products, are said to project sound directly into the wearer’s ear via Bluetooth, making them a discreet communication device. The technology, which debuted at CES 2023, has been described as an advanced solution for making phone calls or listening to audio without anyone noticing.

However, an online search for these earrings shows that they are not available for purchase, and the company that originally developed them seems to have gone dark. The website for NOVA Products now redirects to Icebach Sound Solutions, raising questions about whether the product ever truly existed.

No Proof, Just Speculation

As of now, there is no concrete evidence that Kamala Harris was wearing Nova H1 Audio Earrings. Video footage from the debate shows her wearing a pair of pearl earrings, but there’s no indication that they were anything more than traditional jewelry. In fact, the earrings bear a strong resemblance to Tiffany & Co.’s Double Pearl Hinged Earrings, which Harris has been seen wearing in the past.

Conservative social media users have fanned the flames of this conspiracy, with posts on platforms like Reddit and X claiming that Harris was secretly receiving audio assistance during the debate. Despite the buzz, there has been no confirmation from official sources or credible media outlets. Newsweek reached out to Harris’s team for comment but has yet to receive a response.

Nova H1 Audio Earrings: Fact or Fiction?

The Nova H1 Audio Earrings were initially launched on Kickstarter, promising a discreet way to listen to music or take calls. According to the campaign, the earrings were designed to “project sound into the ear canal” while remaining visually indistinguishable from regular pearl earrings. However, backers of the product have expressed concerns about the company’s legitimacy. Some users left comments on the Kickstarter page over a year ago, asking for updates on their orders, which never materialized.

The last update from the company on Kickstarter was in May 2023, and since then, NOVA Products has gone silent. Icebach Sound Solutions, which appears to have taken over the project, has only made cheeky responses to media inquiries, further fueling skepticism. One response on their website states that they will only give interviews to Jimmy Fallon, adding a layer of irreverence to an already bizarre situation.

Conspiracy Theories in Presidential Debates: A Recurring Theme

This is not the first time conspiracy theories have emerged during a presidential debate. Similar accusations were made against Hillary Clinton during the 2016 election, when conservative media claimed she wore an earpiece to receive coaching during a televised forum. Fact-checking websites like Snopes and PolitiFact debunked these claims, pointing out that there was no evidence to suggest Clinton used any hidden communication devices.

During the 2020 election, Joe Biden faced similar accusations. Some conservative figures alleged that he wore a hidden earpiece during his debate with Donald Trump, though these claims were also proven false by fact-checkers.

International

New X Feature Reveals Where Users Really Are — And It’s Already Causing Chaos

November 25, 2025 – In a move to enhance transparency and curb the spread of bots and foreign influence on its platform, X (formerly Twitter) has launched a new “About This Account” feature that displays the country or region associated with user profiles. The tool, which rolled out widely in recent weeks, allows anyone to quickly check the origin of an account by tapping or hovering over the “Joined” date on a profile page. This comes amid growing concerns over online authenticity, especially in politically charged discussions.

The feature pulls data from where the account was created or where it’s primarily active, providing a simple label like “United States” or “Europe.” X owner Elon Musk has touted it as a key step toward building trust, stating on the platform that it helps “expose fake accounts and foreign agitators.” Early adopters have praised it for its role in “instant bot detection,” with users reporting discoveries of profiles masquerading as locals but originating from distant regions.

Rollout and Initial Impact

The “About This Account” section was first tested on X employee profiles in October before a full launch in mid-November. However, the rollout wasn’t without hiccups: X briefly pulled the feature just hours after debut due to immediate backlash over privacy concerns, only to reinstate it shortly after. Since then, it has become a permanent fixture, also revealing additional details such as username change history and any connections to X’s premium services.

The tool has already sparked heated debates. Proponents argue it reduces misinformation by highlighting potential foreign meddling; for instance, several accounts promoting political narratives, including pro-Palestinian voices and MAGA supporters, have been exposed as operating from countries like Pakistan, Bangladesh, Israel, or even Gaza, contradicting their portrayed identities. One viral thread highlighted “Gaza journalists” based overseas, fueling discussions about propaganda and influence operations.

Critics, however, raise alarms about privacy and potential misuse. Some users worry it could lead to doxxing or harassment, especially for those in sensitive regions. Others note that savvy users might evade detection using VPNs, though X claims it’s refining its algorithms to improve accuracy. In response to feedback, the platform allows users to opt for a broader regional display (e.g., “Asia” instead of a specific country) to balance transparency with privacy.

Broader Implications for Social Media

This feature aligns with X’s ongoing efforts under Musk to prioritize free speech while tackling spam and inauthenticity. It arrives at a time when social platforms face scrutiny from regulators worldwide, including the EU’s Digital Services Act, which demands greater accountability for content moderation. Analysts suggest it could influence competitors like Meta’s Threads or Bluesky to adopt similar tools, potentially reshaping how users verify online identities.

As global events like elections and conflicts amplify the role of social media, features like this could play a pivotal role in distinguishing genuine discourse from orchestrated campaigns. “It’s a double-edged sword,” says digital ethics expert Dr. Lena Vasquez in a recent interview. “While it promotes accountability, it also risks oversimplifying complex user behaviors in a borderless internet.”

How to Use the “About This Account” Feature

Accessing and utilizing this tool is straightforward, making it accessible for everyday users to verify profiles. Here’s a step-by-step guide:

- View a Profile’s Location:
  - Navigate to any user’s profile on X via the web, mobile app (iOS or Android), or desktop.
  - Look for the “Joined [Date]” line below the user’s bio and profile picture.
  - Tap (on mobile) or hover your cursor (on desktop) over the “Joined” date. A pop-up or expanded section titled “About This Account” will appear, showing the associated country or region.

- Check Additional Details:
  - In the same pop-up, you’ll see username history (if changed) and any links to X services, like premium subscriptions.
  - This is useful for spotting suspicious accounts: if a profile claims to be from one place but shows another, it might warrant further scrutiny.

- Customize Your Own Display (for Privacy):
  - Go to your account settings on X.
  - Under “Privacy and Safety,” find the “Account Information” or “Transparency” section (exact labeling may vary by update).
  - Toggle the option to show a broader region instead of your specific country. Note: This doesn’t hide the data entirely but generalizes it (e.g., “North America” vs. “Canada”).

- Tips for Effective Use:
  - Combine with other verification methods: Check for blue checkmarks (indicating verified status), post history, and engagement patterns.
  - Report suspicious accounts via X’s built-in tools if you suspect bots or misinformation.
  - Be mindful of privacy: avoid using this to harass others, as X’s policies prohibit doxxing.

While not foolproof, this feature empowers users to make more informed decisions about who they engage with online. As X continues to evolve, expect potential expansions, such as integrating AI-driven authenticity checks. For the latest updates, keep an eye on X’s official announcements or Musk’s posts.

education

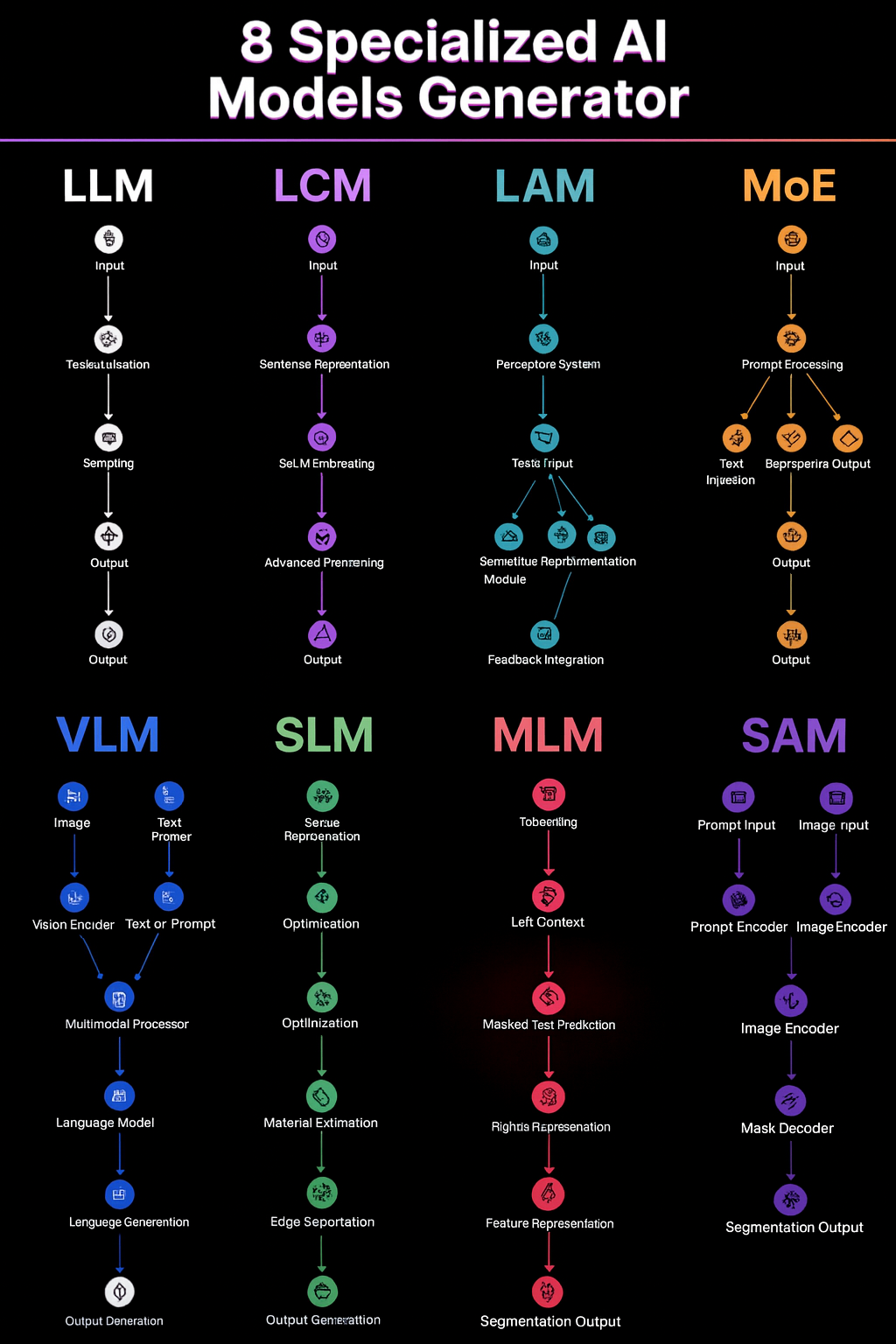

AI’s Next Era: Orchestrating Specialists, Not One Big Model

AI isn’t “one giant model for everything” anymore. The fastest progress is coming from specialized models—each excellent at a narrow slice of the problem—and smart integration that routes the right task to the right model. Here’s a clear, practical tour of the landscape and how to use it.

Why specialization beats one-size-fits-all

- Latency & cost: Smaller or task-specific models respond in milliseconds and are cheap to run; giant generalists aren’t.

- Accuracy on niche tasks: A focused vision model or segmenter will often beat a general LLM + prompt tricks.

- Deployment reality: Some workloads must run on device (privacy, offline) or at the edge (robots, cameras).

- Composable systems: Orchestrating multiple models lets you blend strengths—reason with one, perceive with another, act with an agent.

The roster (what each acronym really means)

🔹 LLM — Large Language Model

- What it is: A generalist text model for reasoning, content generation, coding help, retrieval-augmented Q&A.

- Strengths: Broad world knowledge, chain-of-thought reasoning, tool use via function calling.

- Limits: Slower and costlier than small models; can hallucinate; not great at fine visual detail.

🔹 LCM — Latent Consistency Model (compact diffusion)

- What it is: A diffusion-style model reworked for very fast image generation/upscaling.

- Strengths: Few inference steps → near-real-time visuals; great for product mockups, ads, thumbnails.

- Limits: Narrow domain (images/video); text/logic still needs an LLM.

🔹 LAM — Large Action Model (language/logic agents)

- What it is: Planners/executors that call tools, browse, write code, schedule jobs, and evaluate results.

- Strengths: Turns model outputs into actions; automates multi-step workflows with guardrails.

- Limits: Needs good tools, memory, and evaluation loops; careless agents can “run away.”

🔹 MoE — Mixture of Experts

- What it is: A big model built from many “experts”; a router activates only a few per token.

- Strengths: Scales capacity without paying the full compute cost every step; good for multilingual/heterogeneous tasks.

- Limits: Harder to train/serve; quality depends on good routing.
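To make the "router activates only a few experts" idea concrete, here is a toy sketch of top-k routing. It is an illustration under simplifying assumptions: the experts are plain Python functions on a single number, and the router scores are given by hand, whereas a real MoE layer learns its router and works on token vectors. The names `route_top_k` and `moe_forward` are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw router scores into probabilities."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_scores, k=2):
    """Pick the k highest-scoring experts and renormalize their weights to sum to 1."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, router_scores, k=2):
    """Run only the selected experts and mix their outputs by router weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_scores, k))

# Toy experts (assumption: real experts are feed-forward sub-networks).
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x * x]
scores = [0.1, 2.0, -1.0, 2.0]  # hand-written router scores for one "token"

print(route_top_k(scores))
print(moe_forward(3.0, experts, scores))
```

Only two of the four experts run for this input, which is the source of the compute savings; the "quality depends on good routing" caveat corresponds to how well the scores are learned.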

🔹 VLM — Vision-Language Model

- What it is: Multimodal models that read images (and often video) + text.

- Strengths: Screenshot Q&A, chart understanding, document analysis, UI testing, visual troubleshooting.

- Limits: Still learning fine text in images, small fonts, edge cases; may need OCR aids.

🔹 SLM — Small Language Model

- What it is: Compact LLM (10B parameters or less) for edge/on-device work.

- Strengths: Low latency, private by default, runs on laptops/phones/IoT; great for autocomplete and local assistants.

- Limits: Narrower knowledge, weaker long-form reasoning; often paired with retrieval.

🔹 MLM — Masked Language Model (e.g., BERT-style)

- What it is: Pretraining objective that predicts missing tokens; great encoder for classification/search.

- Strengths: Semantic search, topic labeling, PII detection, entity extraction; fast and stable.

- Limits: Not generative; pair with an LLM when you need prose or code.

🔹 SAM — Segment Anything Model

- What it is: Foundation segmentation for images; pick out objects, regions, people—no labels needed.

- Strengths: Annotation at scale, medical pre-segmentation, retail shelf parsing, industrial inspection.

- Limits: Doesn’t “understand” the object class; combine with a classifier/VLM for semantics.

Quick chooser: which model for which job?

| Goal | Best fit | Why |
|---|---|---|
| Long answers, reasoning, coding help | LLM | Broad knowledge + tool use |
| Instant images or edits | LCM | Few steps → fast + cheap |
| Automate multi-step tasks | LAM (agent) | Plans, calls APIs, checks results |
| Scale quality across domains | MoE | Capacity without full compute |
| Screenshot / PDF / chart Q&A | VLM | Multimodal grounding |
| Private, on-device assistant | SLM | Low latency + privacy |
| Search, classify, extract entities | MLM | Strong encoder semantics |
| Cut objects out of images | SAM | Robust, label-free segmentation |

How they work together (a simple blueprint)

User request → Router → Orchestrator (Agent) → Tools/Models → Verifier → Answer

- Router tags the task (vision, search, segmentation, write).

- Agent (LAM) plans steps and calls:
  - VLM to read a screenshot,
  - SAM to isolate a component,
  - MLM to extract fields,
  - LLM/SLM to explain or draft,
  - LCM to render a visual.

- Verifier/critic (could be a second small model or rules) checks safety, facts, or formatting.

- Response is returned; artifacts (images, JSON) are attached.

This “specialization + integration” pattern often beats a single general-purpose model on speed, cost, and reliability, at the price of more moving parts to operate.
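The blueprint above can be sketched in a few lines. This is a minimal illustration, not a production design: the handler functions (`vlm_read`, `mlm_extract`, `llm_answer`) are hypothetical stubs standing in for real model calls, the router is a keyword heuristic, and the verifier is a simple rule check.

```python
from dataclasses import dataclass

@dataclass
class Request:
    text: str
    has_image: bool = False

# Hypothetical stand-ins for real model calls.
def vlm_read(req):
    return f"[VLM] described image for: {req.text}"

def mlm_extract(req):
    return f"[MLM] extracted fields from: {req.text}"

def llm_answer(req):
    return f"[LLM] drafted answer to: {req.text}"

HANDLERS = {"vision": vlm_read, "extract": mlm_extract, "write": llm_answer}

def route(req):
    """Router: tag the task with simple heuristics."""
    if req.has_image:
        return "vision"
    if any(word in req.text.lower() for word in ("extract", "classify", "tag")):
        return "extract"
    return "write"

def verify(output):
    """Verifier: a rule-based check on the output (non-empty, bounded length)."""
    return bool(output.strip()) and len(output) < 2000

def handle(req, log):
    """Orchestrator: route, call the specialist, verify, and log the decision."""
    label = route(req)
    output = HANDLERS[label](req)
    ok = verify(output)
    log.append({"label": label, "ok": ok})
    return output if ok else "No reliable answer available."

decisions = []
print(handle(Request("Extract the invoice number"), decisions))
print(handle(Request("What does this error mean?", has_image=True), decisions))
print(decisions)
```

Logging every routing decision from the start makes it possible to review later which tasks went to which model.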

Design trade-offs you’ll actually feel

- Latency: SLMs and LCMs can often respond in under a second; large LLMs usually take longer.

- Privacy: On-device SLM + local VLM can keep data off the cloud.

- Accuracy: Domain tasks (vision, segmentation, extraction) usually win with VLM/SAM/MLM over prompting a general LLM.

- Cost control: Use SLM/MLM for 80% of routine work; escalate to a larger LLM only when needed.

- Maintenance: More moving parts → add observability (per-model metrics, routing logs, error budgets).
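The cost-control bullet describes a cascade: try the small model first and escalate only when needed. A minimal sketch follows, assuming each model returns an answer together with a confidence score in [0, 1]. How that confidence is obtained is left open here, and `small_model` and `large_model` are hypothetical stubs.

```python
def small_model(prompt):
    # Hypothetical stub: pretends to be confident only on short prompts.
    confident = len(prompt.split()) <= 8
    return f"[small] {prompt}", 0.9 if confident else 0.4

def large_model(prompt):
    # Hypothetical stub for the expensive generalist.
    return f"[large] {prompt}", 0.95

def cascade(prompt, threshold=0.8):
    """Answer with the small model unless its confidence falls below the threshold."""
    answer, confidence = small_model(prompt)
    if confidence >= threshold:
        return answer, "small"
    answer, _ = large_model(prompt)
    return answer, "large"

print(cascade("reset my password"))
print(cascade("compare these three contract clauses and explain the liability differences in detail"))
```

Counting how often the second branch runs gives the escalation rate, which is the number to watch when tuning the threshold.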

Evaluation playbook (keep it simple)

- Define slices: e.g., “OCR-heavy PDFs,” “charts,” “legal text,” “UI screenshots.”

- Pick metrics: EM/F1 for extraction (MLM), IoU for segmentation (SAM), latency & cost per call, human preference for LLM outputs.

- A/B the router: Measure when it sends tasks to the “expensive” model—can a small model handle it?

- Guardrails: Safety filters, citation checks (for RAG), and a lightweight self-check pass on critical outputs.
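For reference, the metrics named above are short to implement. The sketch below assumes segmentation masks are represented as sets of `(row, col)` pixel coordinates and that extraction outputs are compared as lowercased, whitespace-split tokens; real evaluation suites usually add more normalization.

```python
from collections import Counter

def iou(mask_a, mask_b):
    """Intersection over union for two masks given as sets of pixel coordinates."""
    union = mask_a | mask_b
    return len(mask_a & mask_b) / len(union) if union else 1.0

def exact_match(pred, gold):
    """EM: prediction equals the reference after trimming and lowercasing."""
    return pred.strip().lower() == gold.strip().lower()

def token_f1(pred, gold):
    """Token-level F1: harmonic mean of precision and recall over shared tokens."""
    p, g = pred.lower().split(), gold.lower().split()
    overlap = sum((Counter(p) & Counter(g)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(p)
    recall = overlap / len(g)
    return 2 * precision * recall / (precision + recall)

print(iou({(0, 0), (0, 1)}, {(0, 1), (1, 1)}))
print(exact_match(" Acme Corp ", "acme corp"))
print(token_f1("acme corp ltd", "acme corp"))
```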

Three mini-patterns you can borrow

- Help desk with eyes: VLM reads user screenshots → SAM crops error dialog → LLM writes the fix; average handle time drops, fewer back-and-forths.

- Catalog cleanup: SAM segments product photos → VLM describes → MLM tags → LCM generates clean hero images.

- Private coding copilot: SLM runs on the developer’s laptop (context from local repo) → MoE backend only for hard refactors.

Getting started (no drama, just steps)

- Map your top 5 tasks and their constraints (privacy, latency, budget).

- Start with one specialist beside your LLM (e.g., VLM for screenshots, or MLM for extraction).

- Add a tiny router (heuristics at first) and log decisions.

- Introduce an agent once you have 2–3 tools to chain.

- Instrument everything: latency, cost, success rate, fallback counts.

- Iterate—promote frequent fallbacks to first-class tools, demote what you don’t use.

The bottom line

The future of AI isn’t a bigger hammer. It’s a toolbox:

- LLMs for reasoning,

- VLM/SAM for seeing,

- MLM for knowing,

- LCM for drawing,

- SLM for speed and privacy,

- LAM to coordinate,

- MoE to scale.

Specialization + integration is how you get real-world performance.

“The future of AI isn’t a bigger model—it’s a better orchestra.

LLMs reason, VLMs see, SAM segments, MLMs extract, SLMs protect privacy at the edge, and agents coordinate the flow.

Real performance comes from routing the right task to the right specialist and measuring the system end-to-end.”

— El Mostafa Ouchen, cybersecurity author and analyst

data breaches

Hardware-Level Cybersecurity: How to Stop Root-of-Trust Exploits

Secret Blizzard’s embassy campaign shows why device trust beats TLS trust alone—and how to harden firmware, keys, and boot chains

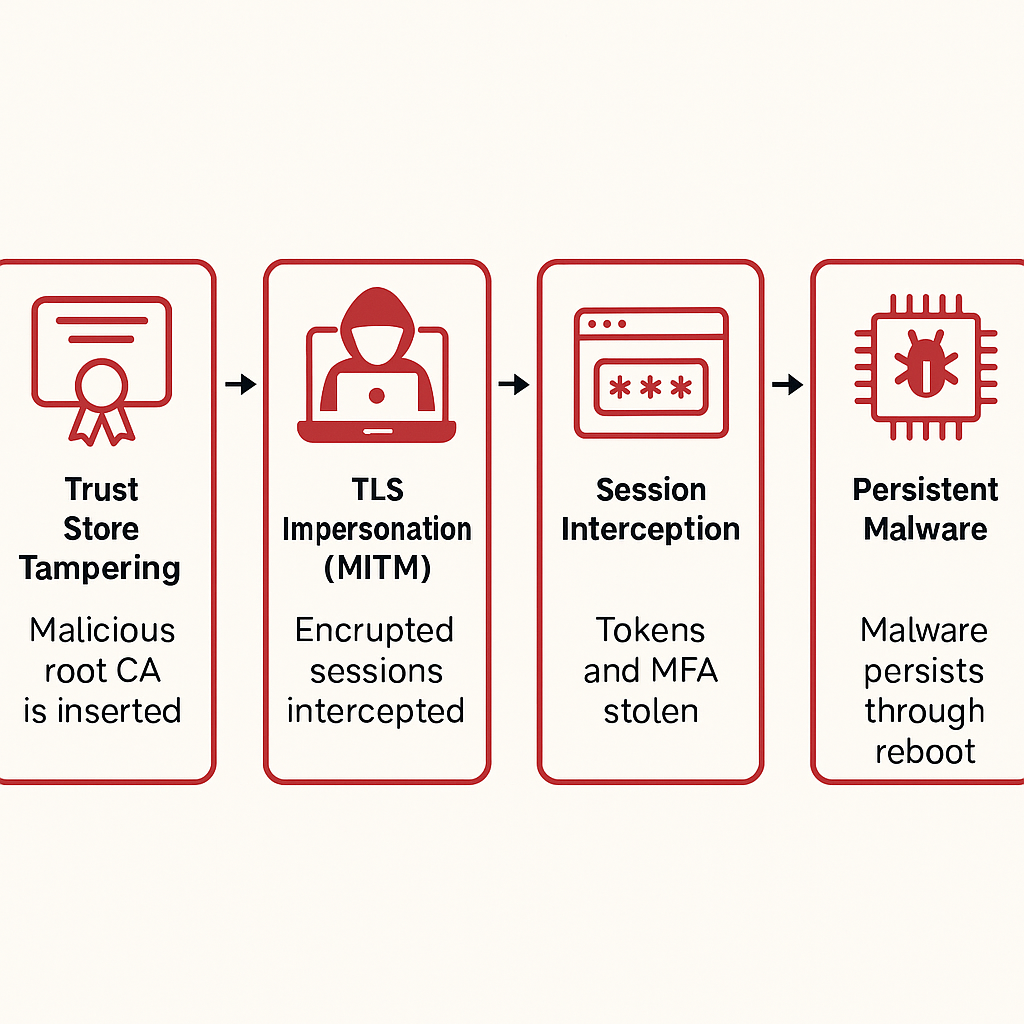

A new expert analysis warns that root-of-trust (RoT) compromises can neutralize MFA and FIDO protections by subverting certificate trust and boot integrity. The campaign—linked to Secret Blizzard—demonstrates that once a system’s trust anchor is controlled, attackers can man-in-the-middle “secure” sessions and persist below the OS. This guide details practical defenses and standards-based controls.

A recent case study shows Russian-backed Secret Blizzard bypassing MFA at foreign embassies by tampering with the root of trust—the very mechanism devices use to decide what (and whom) to trust online. Instead of phishing credentials, attackers inserted a rogue root certificate and intercepted encrypted traffic without warnings, proving that TLS-anchored MFA fails when the device’s trust store is compromised.

What Happened

- Attack essence: Control the victim’s local trust anchor (root CA / trust store) → impersonate sites via MITM → harvest tokens, cookies, and MFA flows without browser alerts.

- Why it matters: FIDO/WebAuthn assume authentic TLS. If TLS validation is subverted, MFA loses its authenticity check.

- Who’s at risk: Any org that relies solely on TLS + MFA without device-bound credentials, firmware integrity, and independent attestation—especially governments, cloud operators, finance, and enterprises with high-risk network locales.

“A root-of-trust compromise undermines all TLS-based protections, including FIDO-based MFA.” — The Hacker News expert analysis summarizing the campaign.

“Platform firmware must be protected, corruption detected, and recovery ensured in the event of compromise.” — NIST SP 800-193 (Platform Firmware Resiliency).

“Treat firmware and trust stores as live attack surfaces. Bind credentials to hardware, enforce measured boot, and continuously attest device state—or assume your MFA can be silently routed.” — El Mostafa Ouchen, cybersecurity author and analyst.

Technical Deep Dive

1) Root-of-Trust Attack Flow

- Trust Store Tampering: Adversary adds a malicious root CA or manipulates the device PKI.

- TLS Impersonation (MITM): The attacker issues leaf certs for target domains. The browser accepts them because the rogue root is trusted.

- Session Interception: Harvest SAML/OIDC tokens, cookies, and challenge/response flows—even with WebAuthn/FIDO—because the browser “thinks” it’s talking to the real site.

2) Why Firmware & Boot Matter

Above the OS, EDRs and browsers can’t see a poisoned trust anchor set during early boot or via privileged management engines. UEFI/firmware persistence was proven feasible by LoJax, the first in-the-wild UEFI rootkit, showing long-lived pre-OS footholds.

3) Controls That Actually Help

- Device-bound, non-exportable keys (TPM/Secure Enclave/Pluton): Keys never leave hardware; sign-in requires the physical device.

- Measured & Verified Boot: Record each boot stage in hardware and verify with policies; quarantine on mismatch. Follow NIST SP 800-193 for protect-detect-recover.

- Independent Root of Trust for Credentials: Co-sign credentials with both the device and the identity cloud, so a tampered local trust store can’t forge identity.

- Mutual Cryptographic Verification: Device ↔️ IdP both attest to each other beyond TLS (e.g., hardware signals + cloud policy).

- Continuous Session Risk Checks: Re-evaluate device posture and revoke access mid-session on trust drift (rogue CA detected, boot log mismatch).
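Measured boot rests on a hash chain: each stage is hashed into a register that can only be extended, never set directly, so a change to any stage changes the final value. The sketch below imitates a TPM-style extend with SHA-256 in plain Python. It is a simplified model for intuition only; the stage names are made up, and a real deployment reads PCR values from the TPM and verifies a signed quote.

```python
import hashlib

def extend(pcr: bytes, component: bytes) -> bytes:
    """TPM-style extend: new_pcr = SHA256(old_pcr || SHA256(component))."""
    return hashlib.sha256(pcr + hashlib.sha256(component).digest()).digest()

def measure_boot(stages):
    """Fold every boot stage into one register, starting from all zeros."""
    pcr = bytes(32)
    for stage in stages:
        pcr = extend(pcr, stage)
    return pcr

# Hypothetical boot stages; real measurements cover firmware, bootloader, kernel, and config.
golden = measure_boot([b"firmware-v1", b"bootloader-v1", b"kernel-v1"])
observed = measure_boot([b"firmware-v1", b"bootloader-EVIL", b"kernel-v1"])

if observed != golden:
    print("boot measurement mismatch: quarantine device")
```

Because the register depends on every earlier value, a tampered early stage cannot be hidden by later, honest stages.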

MITRE ATT&CK Mapping (selected)

- Initial Access: Valid Accounts via session hijack (T1078), Trusted Relationship (T1199).

- Defense Evasion: Modify Authentication Process (T1556), Subvert Trust Controls: Install Root Certificate (T1553.004).

- Credential Access: Web session cookie theft (T1539 via MITM).

- Persistence: Pre-OS Boot (T1542), e.g., System Firmware (T1542.001) for UEFI implants or Bootkit (T1542.003).

- Command & Control: Web protocols over TLS (T1071.001).

(IDs aligned to Enterprise matrix; exact sub-techniques vary by environment.)

Impact & Response

Impact: Stolen sessions, bypassed MFA, and durable persistence if boot firmware is altered. Government and regulated sectors face heightened compliance and reporting exposure given device trust failures.

Immediate actions (step-by-step):

- Inventory & lock trust stores: Alert on new root CAs; require admin-approval workflows + logging for CA changes.

- Turn on measured/verified boot across fleets; export boot measurements to an attestation service.

- Bind credentials to hardware: Enforce TPM/Secure Enclave/Pluton-backed keys; disable fallbacks to exportable secrets.

- Session protection: Short-lived tokens, continuous re-auth on posture drift, and token binding where available.

- Firmware discipline: Apply OEM updates; enable write protection on SPI flash; require signed UEFI capsules; monitor for UEFI variable anomalies.

- Isolation on suspicion: If rogue CAs or boot mismatches are detected, block access, capture measurements, and route the device to firmware re-flash / secure recovery.

Background & Context

- Real-world precedent: LoJax proved UEFI persistence in the wild (Sednit/Fancy Bear), making below-OS implants a practical threat.

- Raising the baseline: Vendors are pushing hardware roots like Microsoft Pluton to bring TPM-class security inside the CPU and enable simpler, updateable attestation at scale.

What’s Next

Expect wider adoption of hardware-anchored identity, token binding, and continuous device attestation—and likely policy mandates in government and critical infrastructure to implement NIST’s protect-detect-recover firmware model. For defenders, the lesson is clear: move trust from the network perimeter into silicon.

Root-of-Trust (RoT) Defense Checklist for CISOs & IT Teams

1. Firmware & Boot Integrity

- ✅ Enable Secure Boot + Verified Boot on all endpoints.

- ✅ Turn on measured boot and forward logs to an attestation service (e.g., Microsoft Defender for Endpoint, formerly Defender ATP, or custom MDM).

- ✅ Apply NIST SP 800-193 Protect–Detect–Recover guidance: enable rollback protection, watchdogs, and signed firmware updates.

2. Credential Binding

- ✅ Require TPM/Secure Enclave/Pluton for storing keys (disable exportable software keys).

- ✅ Enforce device-bound FIDO2 credentials in identity providers (Microsoft Entra ID, formerly Azure AD; Okta; Google Workspace).

- ✅ Turn off legacy MFA fallback (e.g., SMS or OTP that bypass hardware).

3. Trust Store & Certificates

- ✅ Monitor for rogue root certificates in Windows/Mac/Linux trust stores.

- ✅ Enforce admin-only CA installs with logging and SIEM integration.

- ✅ Run weekly CA inventory scans; alert on any unauthorized roots.
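A CA inventory scan boils down to comparing the fingerprints of currently trusted roots against an approved baseline. The sketch below shows that comparison in Python. The helper names are hypothetical, and `load_system_roots` relies on the standard `ssl` module, which only reports certificates already loaded into the context (on some platforms, directory-based stores are loaded lazily), so treat it as a starting point and not a complete inventory tool.

```python
import hashlib
import ssl

def fingerprint(der_cert: bytes) -> str:
    """SHA-256 fingerprint of a DER-encoded certificate."""
    return hashlib.sha256(der_cert).hexdigest()

def unapproved_roots(der_certs, approved_fingerprints):
    """Return fingerprints of trusted roots that are missing from the approved baseline."""
    found = {fingerprint(cert) for cert in der_certs}
    return sorted(found - set(approved_fingerprints))

def load_system_roots():
    """Roots visible to Python's default TLS context (may be incomplete, see note above)."""
    return ssl.create_default_context().get_ca_certs(binary_form=True)

# Demo with placeholder bytes in place of real certificates.
baseline = {fingerprint(b"root-a"), fingerprint(b"root-b")}
current = [b"root-a", b"root-b", b"rogue-root"]
for fp in unapproved_roots(current, baseline):
    print("ALERT unapproved root CA:", fp)
```

In practice the baseline would be captured from a known-good image, and alerts would be forwarded to the SIEM mentioned above.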

4. Session Protection

- ✅ Enable token binding where supported (ties session to device).

- ✅ Enforce short-lived tokens (e.g., 10–15 min for critical apps).

- ✅ Turn on continuous risk evaluation—revoke sessions on CA mismatch or boot measurement drift.
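The session checks above combine into one policy function that runs on every request or on a timer. The sketch below is a simplified model with hypothetical `Session` and `Posture` types; in a real system the posture signals would come from the attestation service and the trust-store scan.

```python
from dataclasses import dataclass

@dataclass
class Session:
    issued_at: float          # seconds since epoch when the token was issued
    boot_measurement: str     # measurement recorded at sign-in
    ttl_seconds: int = 900    # 15-minute token lifetime

@dataclass
class Posture:
    boot_measurement: str       # latest attested measurement
    unapproved_root_count: int  # result of the latest trust-store scan

def revocation_reasons(session, posture, now):
    """Return the list of reasons to revoke; an empty list means the session may continue."""
    reasons = []
    if now - session.issued_at > session.ttl_seconds:
        reasons.append("token expired")
    if posture.unapproved_root_count > 0:
        reasons.append("unapproved root CA present")
    if posture.boot_measurement != session.boot_measurement:
        reasons.append("boot measurement drift")
    return reasons

session = Session(issued_at=1000.0, boot_measurement="abc123")
print(revocation_reasons(session, Posture("abc123", 0), now=1300.0))
print(revocation_reasons(session, Posture("def456", 1), now=2500.0))
```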

5. Supply-Chain & Device Controls

- ✅ Use OEM-signed firmware only; enable capsule update verification.

- ✅ Lock SPI flash where hardware supports it.

- ✅ Segment management engines (iLO, iDRAC, BMC) into separate VLANs with strict ACLs.

6. Incident Response Playbook

- ✅ Isolate any device with trust-store anomalies or boot log mismatch.

- ✅ Re-flash firmware with vendor images, not OS reinstalls (malware may survive).

- ✅ Rotate all keys and certificates tied to that device.

- ✅ Conduct a forensic review of boot/firmware logs for persistence artifacts.

📌 Pro Tip from El Mostafa Ouchen:

“Defenders must treat trust anchors—firmware, secure boot, TPMs—not as passive baselines but as active attack surfaces. Building continuous attestation pipelines is the only way to catch RoT drift before adversaries turn MFA into a bypassed formality.”

Sources:

- The Hacker News, Expert Insights (Aug 18, 2025): Secret Blizzard’s RoT attack path and countermeasures (device-bound credentials, independent roots, mutual verification, continuous checks).

- NIST SP 800-193 (2018): Platform Firmware Resiliency; protect, detect, recover model; measured/verified boot guidance.

- ESET (LoJax, 2018): First in-the-wild UEFI rootkit demonstrating pre-OS persistence risk.

- Microsoft Pluton (2025 docs): Silicon-level root of trust, TPM 2.0 functionality, and updateable hardware security.